AI Tools Battle 2025: Claude Judges Perplexity Pro vs ChatGPT Plus vs DeepSeek vs Gemini Pro in Epic Head-to-Head Tests

TL;DR – Best AI Tool 2025 Comparison

For deep research and accurate answers: Perplexity Pro

For creative tasks and image generation: Gemini Pro (Nano Banana)

For coding/building tools/debugging: ChatGPT Plus (preferred) and DeepSeek

Full breakdown, test cases, screenshots, and recommendations below!

(Disclaimer: These results and recommendations are based on my personal experience and additional analysis from Claude (free plan). Please do your own research and read the full post for detailed comparisons and context before making any decisions. Your needs, preferences, or results may vary.)

As we all know, in 2025 artificial intelligence (AI) isn’t just a buzzword or a fancy term. It is becoming the new backbone of productivity for students, educators, freelancers, and creators everywhere.

But with so many new tools launching each week and claiming to “transform” the way you work, deciding which one is worth your money is a big and confusing challenge.

So I decided to test Perplexity Pro, ChatGPT Plus, DeepSeek, and Gemini Pro myself, using the paid versions where available:

Perplexity Pro: $20/month

ChatGPT Plus: $20/month

Gemini Pro: $19.99/month

DeepSeek: Free (viral sensation)

(All prices current as of October 3, 2025; they may change in the future.)

In this post, you get my honest personal opinion, plus extra analysis from Claude to make the comparisons even fairer.

One important point before we start: every AI tool has its own strengths where it does better than the others. For example, some tools stand out for accuracy, some are good at research, and some are good at brainstorming ideas.

To test these AI tools, I used practical, real-world prompts designed to evaluate them across several key criteria, including:

Research tasks (live data retrieval, psychology theories, academic summaries)

Math problems (from simple calculations to advanced function analysis)

Equity calculations for startups

Product copywriting for SEO-optimized listings

Coding challenges (such as Canvas website quiz creation)

Image generation using creative constraints

Academic summarizing with clear formatting

If you are a student, teacher, blogger, freelancer, or anyone trying to choose the right AI to spend your money on, this post should give you more clarity.

Comparison & Results

For these comparisons, I ran the same prompts across all AI platforms to keep things fair. On ChatGPT and DeepSeek, I turned on "thinking mode" for more detailed responses.

Gemini provides deep-thinking answers by default, and with Perplexity, I used its special ability to search directly from sources when needed.

This way, each platform was tested at its best for genuine, real-world results.

1) Live Data & Research

For this part, I wanted to test their real-time research abilities, so I used the following prompt in each tool. ChatGPT and Gemini took longer to respond because thinking mode was enabled.

Prompts used: Provide a comprehensive overview of the current global inflation rates as of September 2025. Include specific data for at least 5 major economies (US, EU, UK, Japan, China), explain the main factors driving inflation in each region, and cite your sources. If you cannot access current data, clearly state this limitation.

You can see the results in the screenshots below

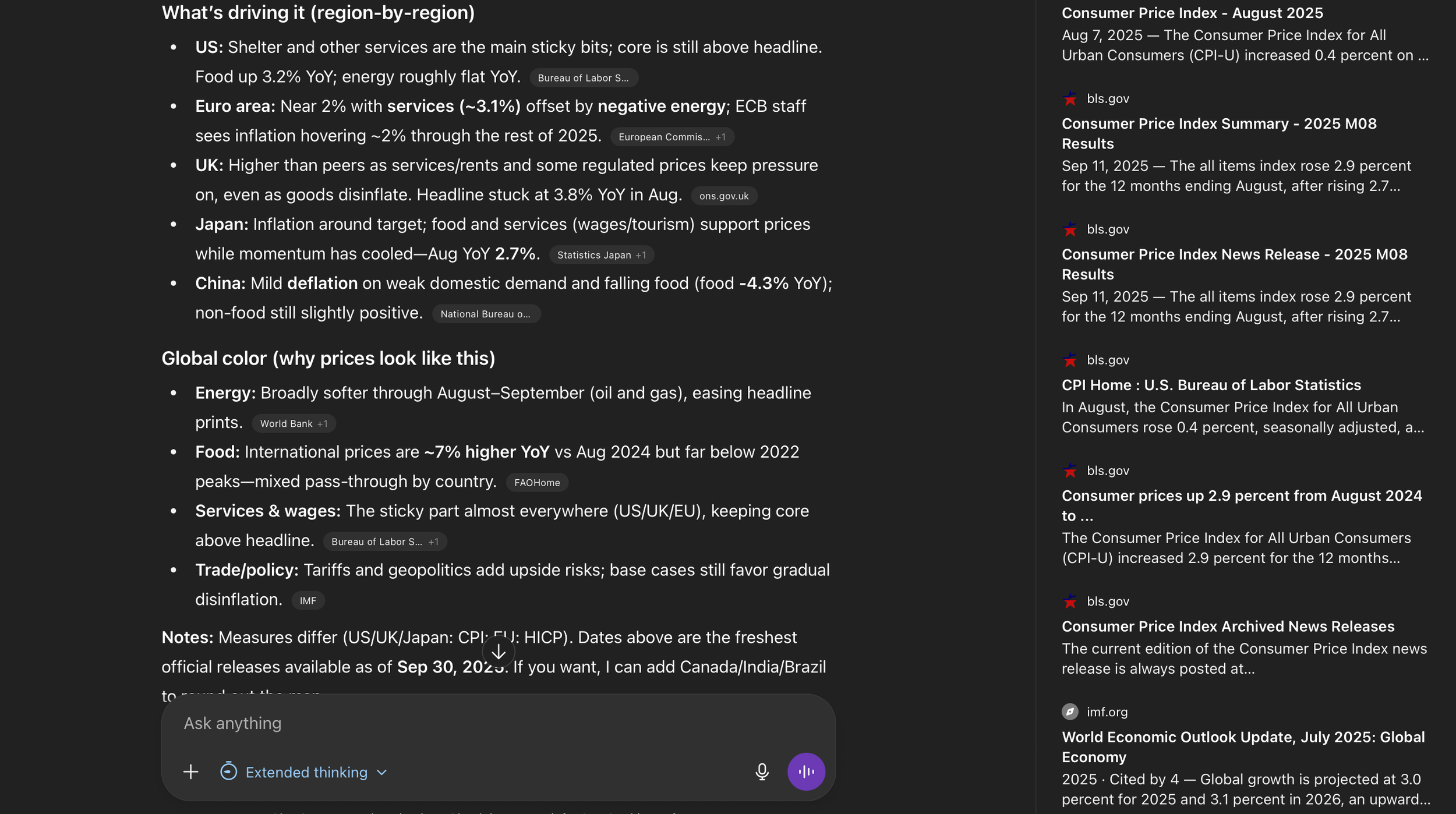

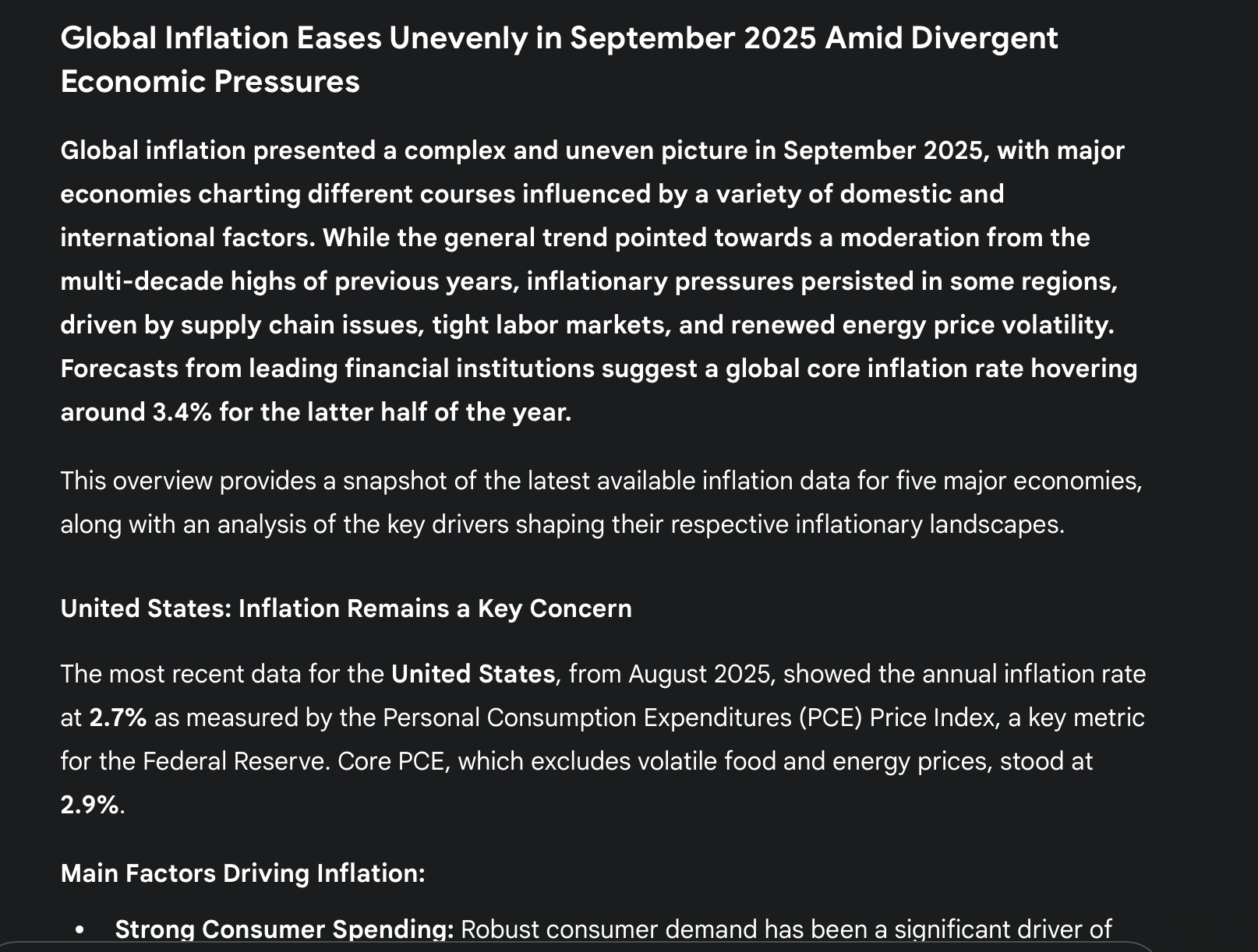

ChatGPT

Screenshot: ChatGPT Plus summarizes global inflation data (US, EU, UK, Japan, China) for September 2025


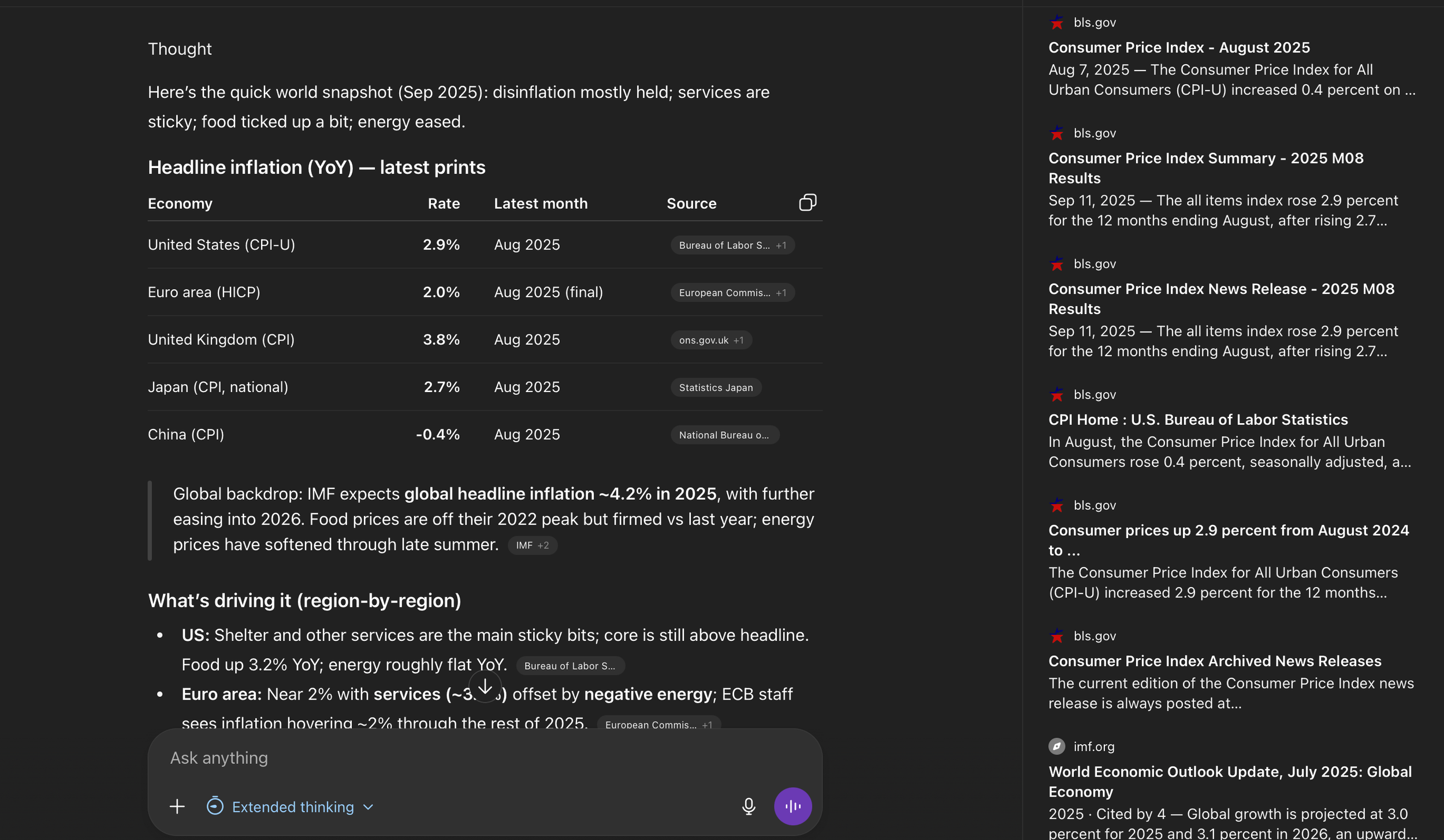

Perplexity

Screenshot: Perplexity Pro showing visual source cards and economic data for live research


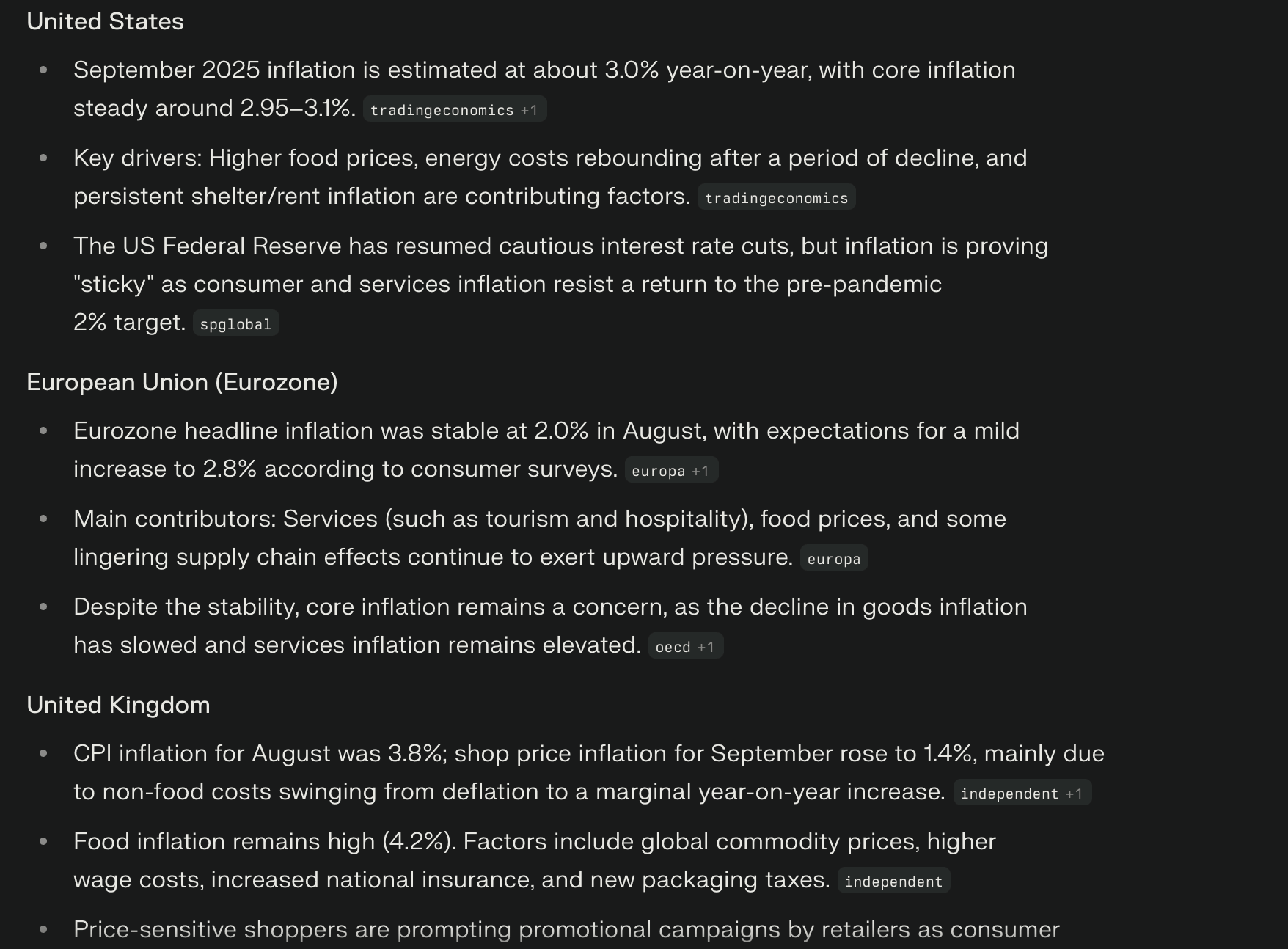

Gemini

Screenshot: Gemini explaining key global inflation trends and country breakdowns—September 2025 data


DeepSeek

Screenshot: DeepSeek’s approach and limitations on inflation analysis

Results:

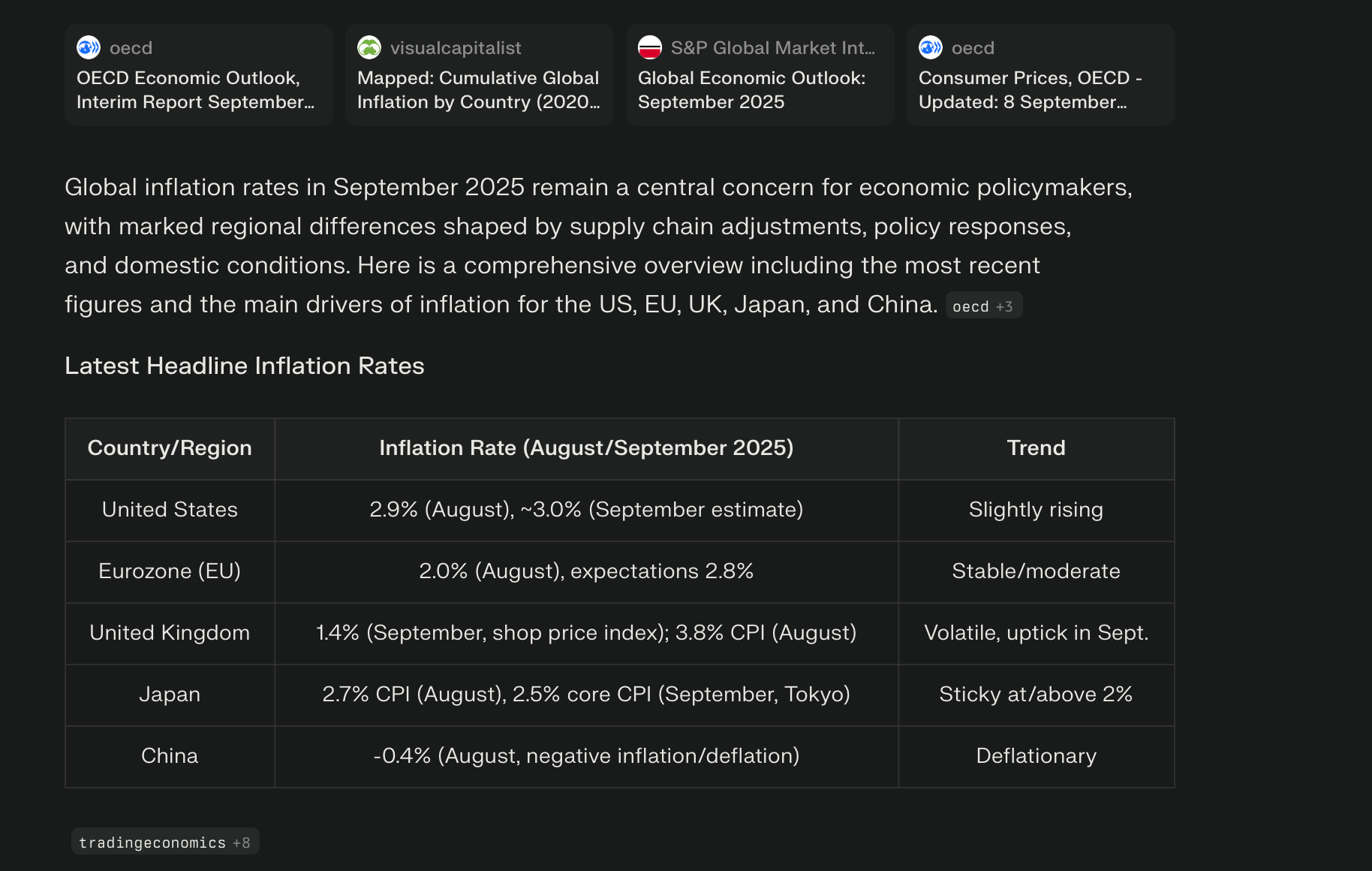

Inflation Figures Comparison (September 2025 Data)

ChatGPT Plus:

United States: 2.9% (August 2025, CPI-U)

EU (Eurozone): 2.0% (August 2025, HICP)

United Kingdom: 3.8% (August 2025, CPI)

Japan: 2.7% (August 2025, CPI national)

China: -0.4% (August 2025, CPI)

Perplexity Pro:

United States: 2.9% (August), ~3.0% (September estimate)

EU (Eurozone): 2.0% (August), expectations 2.8%

United Kingdom: 1.4% (September shop price index); 3.8% CPI (August)

Japan: 2.7% CPI (August), 2.5% core CPI (September, Tokyo)

China: -0.4% (August)

Gemini Pro:

United States: 2.7% (August 2025, PCE Price Index) - Note: Uses different measure

EU (Eurozone): 2.9% (September 2025 flash estimate)

United Kingdom: 3.8% (August 2025), potential rise to 4.0% (September forecast)

Japan: 2.7% (August 2025)

China: -0.4% (August 2025)

DeepSeek:

No option to gather real-time data

As you can see, ChatGPT, Perplexity, and Gemini all did a really great job with the research. I personally liked Perplexity’s answer the most because it used the most accurate sources for its search.

I wasn’t sure about some of the technical details of the financial terms, so I asked Claude to analyze the answers. Here is what it said:

Perplexity and ChatGPT used the correct sources - they both cited CPI-U (Consumer Price Index for All Urban Consumers) for the US, which is the standard headline inflation measure reported by the Bureau of Labor Statistics and widely used in media and policy discussions.

Gemini used PCE (Personal Consumption Expenditures), which is the Federal Reserve's preferred measure but not the standard "headline inflation rate" most people reference. This makes its 2.7% figure technically accurate, but a different metric from what was requested for a general inflation comparison.
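Both CPI-U and PCE rates are usually quoted year over year: the percentage change in a price index compared with the same month one year earlier. The difference is which index you start from. Here is a minimal Python sketch of that arithmetic; the index levels are made up purely for illustration and are not real BLS or BEA data.

```python
def yoy_inflation(index_now: float, index_year_ago: float) -> float:
    """Year-over-year inflation in percent from two price-index levels.

    The formula is the same for CPI-U or PCE; only the underlying index differs.
    """
    if index_year_ago <= 0:
        raise ValueError("index_year_ago must be positive")
    return (index_now / index_year_ago - 1.0) * 100.0


# Hypothetical index levels, chosen only to show the calculation.
cpi_now, cpi_year_ago = 102.9, 100.0
pce_now, pce_year_ago = 102.7, 100.0

print(f"CPI-style rate: {yoy_inflation(cpi_now, cpi_year_ago):.1f}%")
print(f"PCE-style rate: {yoy_inflation(pce_now, pce_year_ago):.1f}%")
```

Because CPI-U and PCE cover different baskets of spending with different weights, the same month can produce two different rates, which is why a PCE figure isn't a like-for-like match with a CPI-U figure.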

2) The Creative Copywriting Test

For the second test, I gave each AI a real business challenge. I asked them to write a 300-word product description for a new mental health smartwatch.

The target audience was health-conscious millennials. I wanted a tone that was professional but easy to approach, with clear highlights of innovations, strong privacy promises, and a call to action that really pushed people to buy.

Prompts used: Write a 300-word product description for a revolutionary smartwatch that monitors mental health through biometric data. The target audience is health-conscious millennials. The tone should be professional yet approachable, emphasizing both innovation and user privacy. Include a compelling call-to-action.

You can see the results in the screenshots below

ChatGPT

Screenshot: ChatGPT’s comprehensive product description for a biometric mental health smartwatch tailored for millennial users.

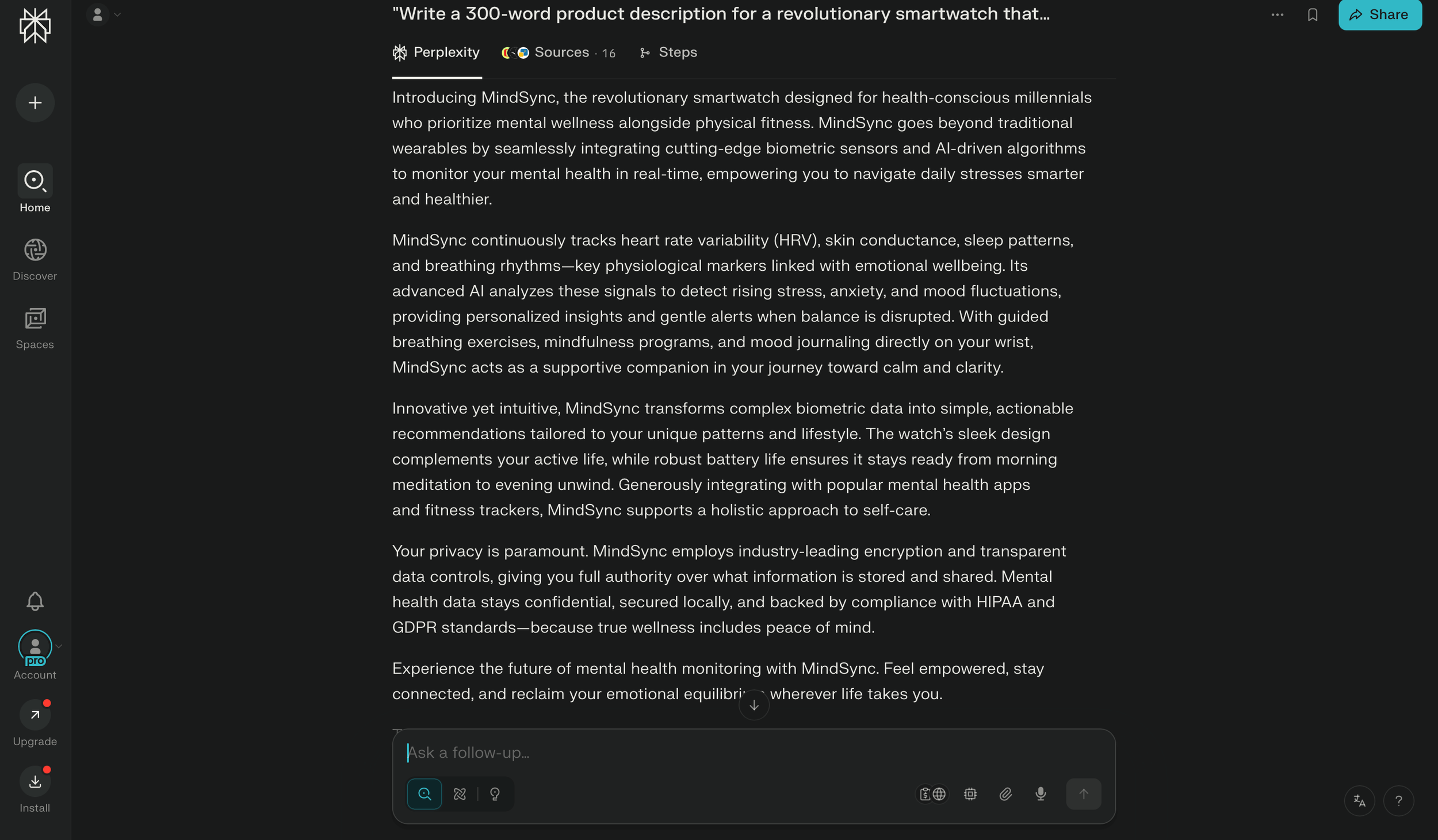

Perplexity

Screenshot: Perplexity’s comprehensive product description for a biometric mental health smartwatch tailored for millennial users.

Gemini

Screenshot: Gemini’s comprehensive product description for a biometric mental health smartwatch tailored for millennial users.

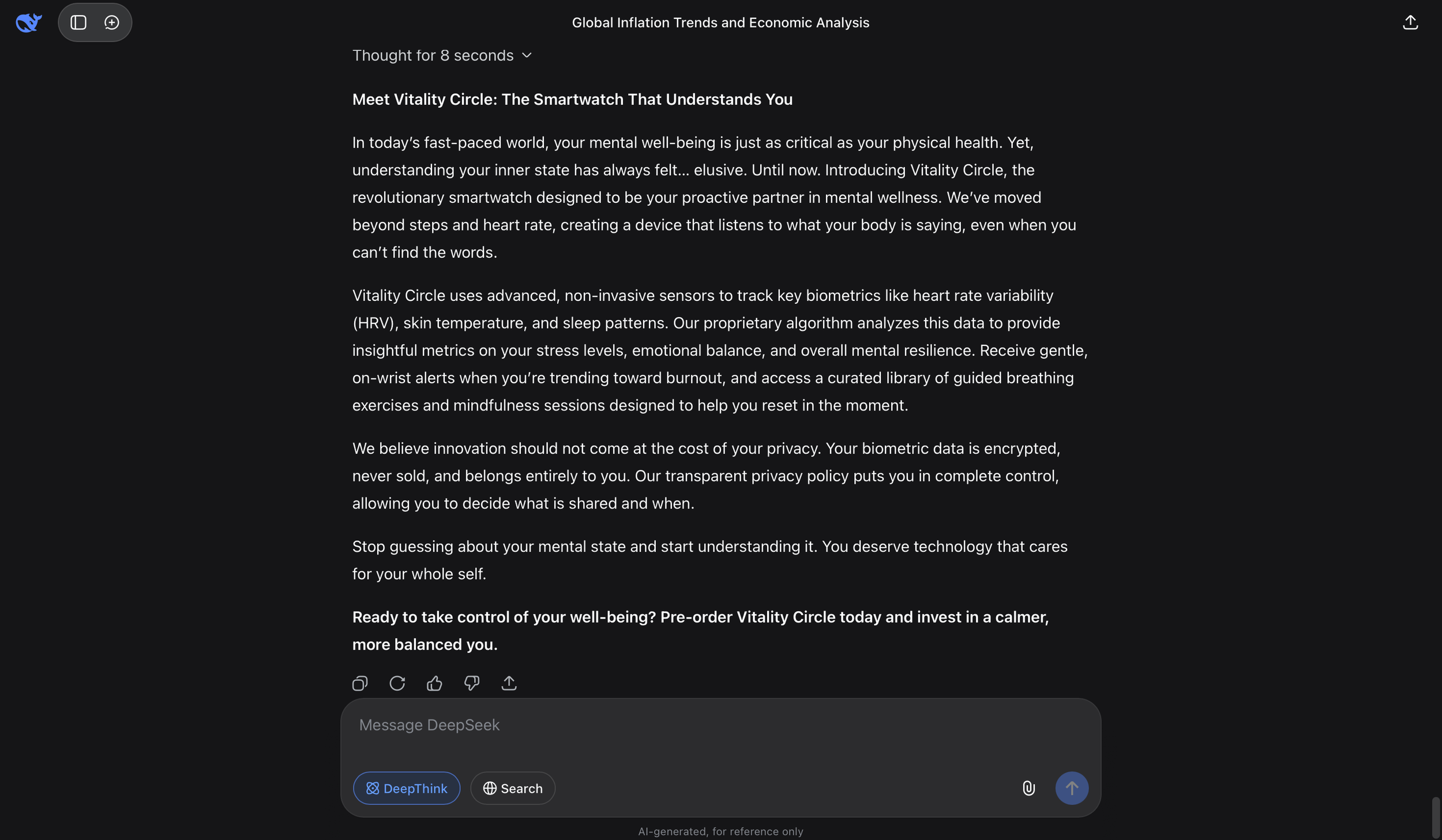

DeepSeek

Screenshot: DeepSeek’s comprehensive product description for a biometric mental health smartwatch tailored for millennial users.

Results:

ChatGPT wrote about 330 words, a little over the target but close enough. The writing felt like something you’d actually see in a marketing campaign.

It used easy, friendly phrases like “No dark patterns” and “Your health, your call.” It listed cool features like AI on-device processing, sleep tracking, heart rate variability, skin temperature, and stress insights. The description showed exactly why this watch stands out.

Perplexity wrote about 265 words and felt formal, reading more like a report than talking to millennials.

It oddly cited 16 sources, like SEC filings, showing it misunderstood this was a creative writing task.

To be fair, though, I had enabled the SEC source option with my first prompt, and you can’t change those settings after the first prompt.

Gemini’s response was the shortest, around 210 words, and came across as generic and lacking personality. It didn’t give me much to connect with.

DeepSeek wrote 220 words. It was more emotional, especially the phrase “when you can’t find the words.” The tone felt corporate, though, and it didn’t explain the new technology much, so it didn’t capture the revolutionary feel.
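The word counts above are approximate. If you want to check a draft against a 300-word target yourself, here is a rough Python sketch. It counts words by splitting on whitespace (an assumption; word processors may count hyphenated words or numbers a little differently), and the 10% tolerance is an arbitrary example value, not a rule from this test.

```python
def word_count(text: str) -> int:
    """Count words by splitting on any run of whitespace."""
    return len(text.split())


def within_target(text: str, target: int = 300, tolerance: float = 0.10) -> bool:
    """Return True if the word count is within +/- tolerance of the target."""
    count = word_count(text)
    return abs(count - target) <= target * tolerance


draft = "Your health, your call. No dark patterns."
print(word_count(draft))      # 7
print(within_target(draft))   # False: far below 300 words
```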

ChatGPT clearly won this round on tone, innovation details, and privacy. It talked about encryption and on-device data processing, and made a strong promise of “no selling data.”

Its call to action pushed urgency and exclusivity with “Pre-order now to secure launch pricing, unlock the Founders’ band, and start building a calmer, sharper you—one signal at a time.”

If you’re a student or creator getting into marketing or launching products, this shows how important it is to balance creativity with clear facts and audience connection.

ChatGPT nailed it, while the others felt either too short, too formal, or off-topic. For real campaigns, that makes a big difference.

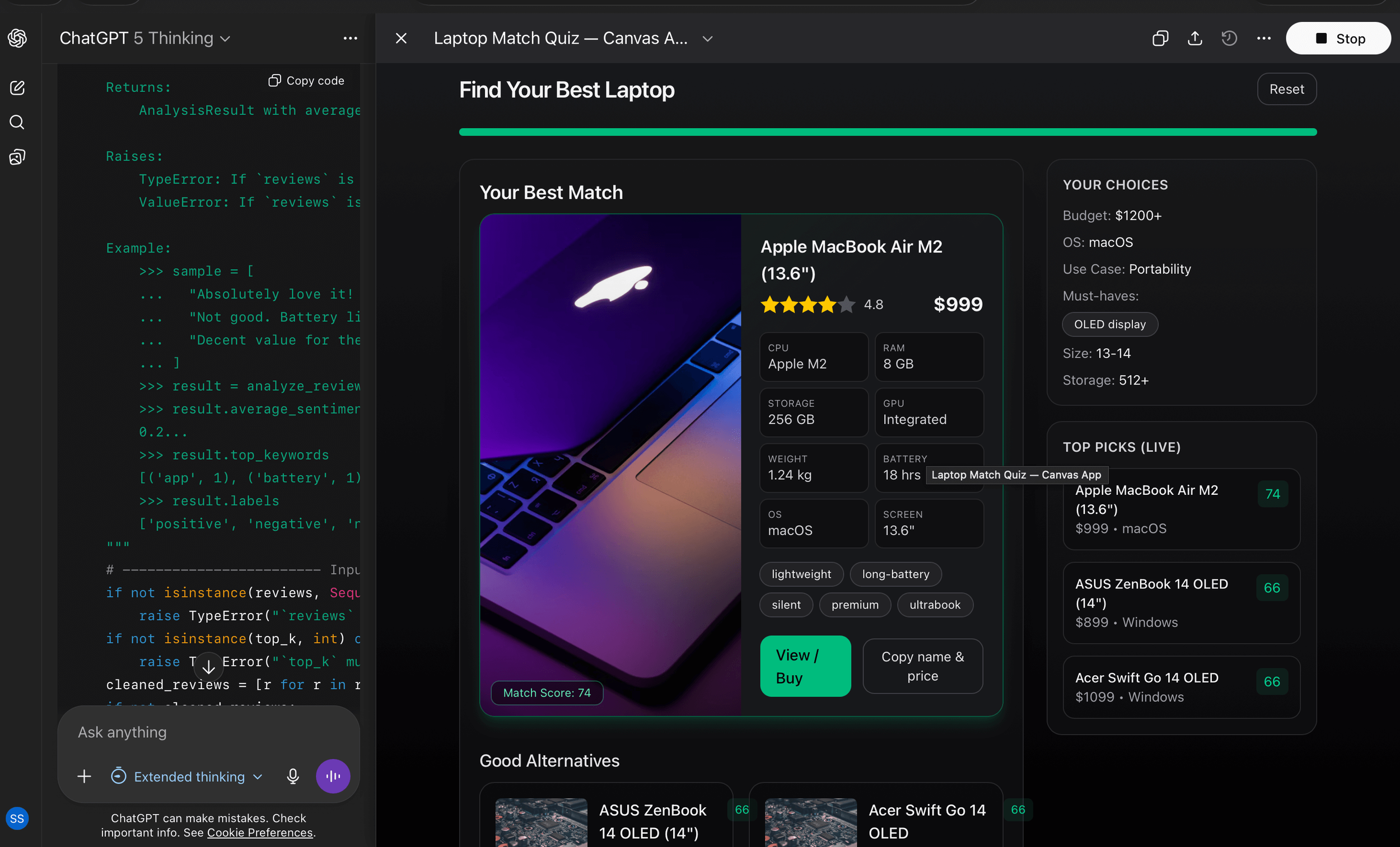

3) Practical Coding & Website Generation

I wanted to see which AI could really help students build a fully interactive quiz-style website inside Canvas.

The challenge was to create a site that asks users about their laptop needs, like budget and features, then shows the perfect match with an animated card revealing price, specs, rating, and a full summary.

Prompts used: Make a quiz-style website inside Canvas that asks students a few questions about their laptop needs (budget, preferred features, etc.). At the end, the site shows which laptop is the best match, with an animated reveal and a card showing all the info, price, and a rating.

You can see the results in the screenshots below

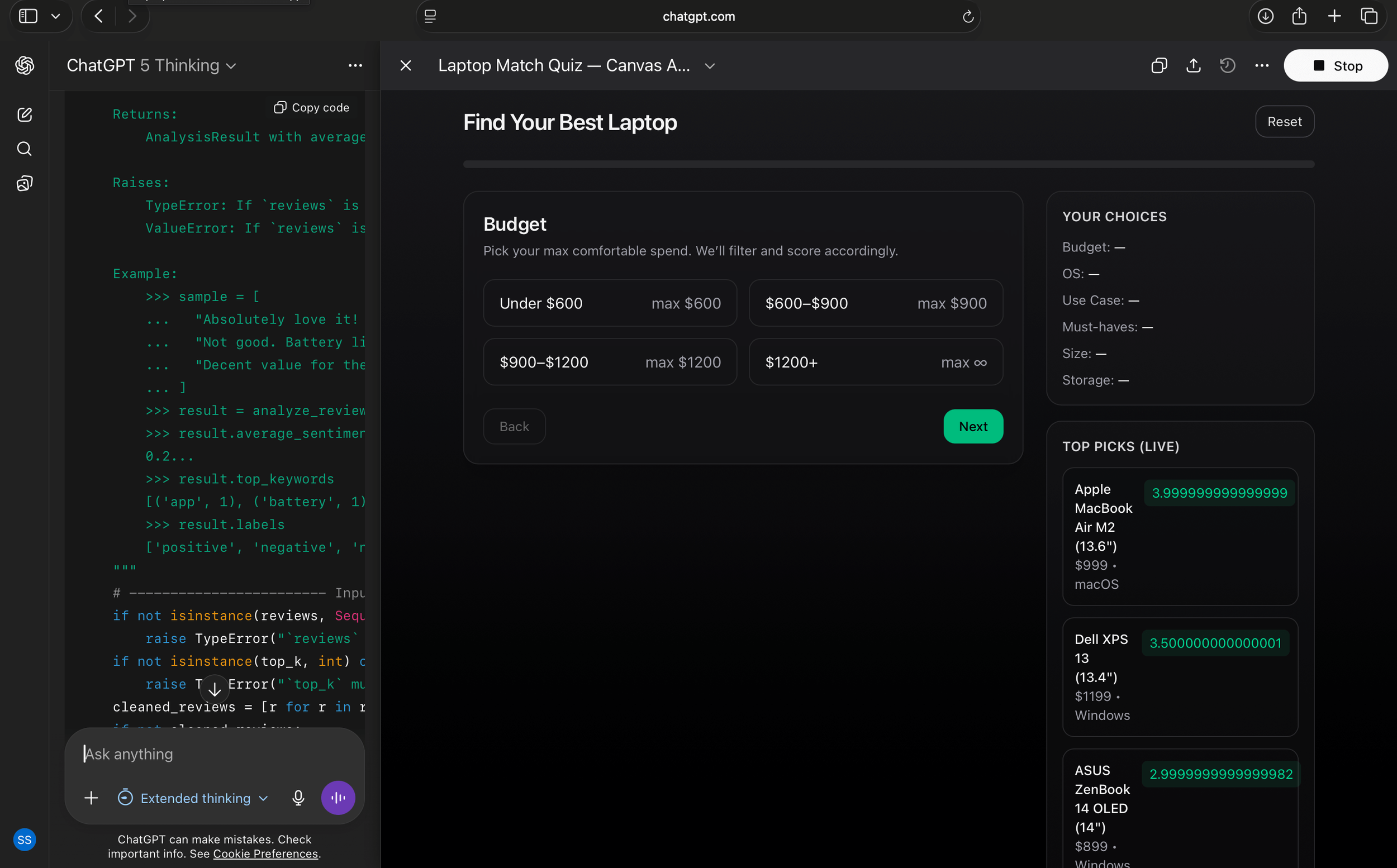

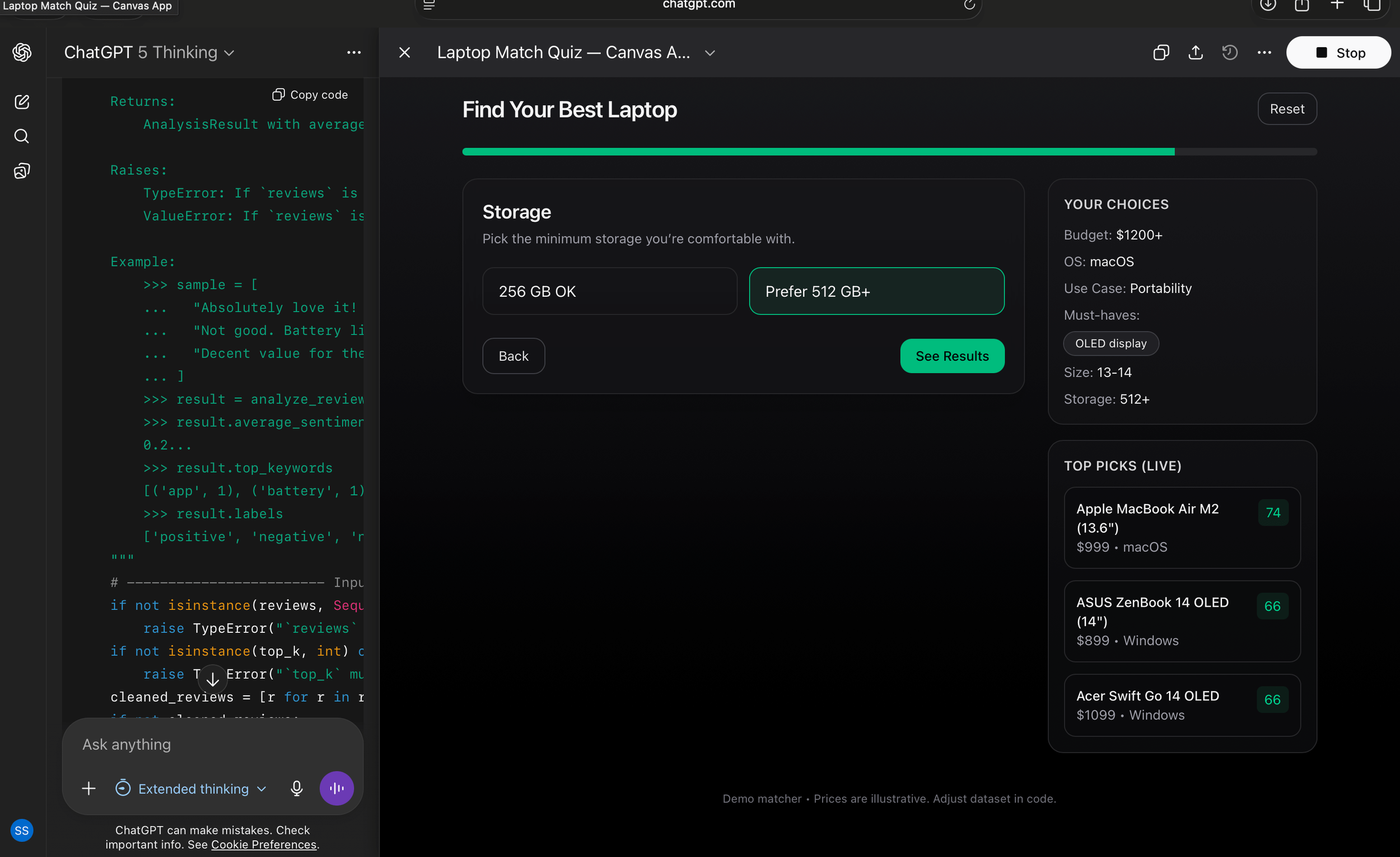

ChatGPT

Screenshot: ChatGPT laptop recommendation website


Gemini

Screenshot: Gemini laptop recommendation website


Results:

DeepSeek and Perplexity didn’t offer any option for Canvas or web app code, so for web development students or creators, they didn’t produce any usable output.

ChatGPT was the clear winner by a mile. It didn’t just produce structured, detailed code; it even fetched real-time laptop data from the internet.

The site looked professional, modern, and actually runs inside Canvas. That makes it ideal for classes or student projects.

I was really impressed. ChatGPT’s response almost matched the design I imagined and built a fully functional site from scratch.

Whether you want instant recommendations, a sleek UI, or live features, ChatGPT’s coding skills blew the others out of the water.

The code was long and complex but very clean, making ChatGPT the best option if you want practical website automation.

If you’re a student or coder building quiz-style sites or interactive apps inside Canvas, ChatGPT not only delivers working code but lets you launch real projects you can demo and improve.
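For readers curious about the logic behind a quiz like this, here is a minimal Python sketch of the matching step: keep only laptops within the user’s budget, then pick the one with the most preferred features. This is not the code ChatGPT generated, and the laptop names, prices, features, and ratings are entirely hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Laptop:
    name: str
    price: int          # price in USD
    rating: float       # 0-5 stars
    features: set[str] = field(default_factory=set)


# Hypothetical catalogue for illustration only.
CATALOGUE = [
    Laptop("Alpha 13", 699, 4.2, {"long battery", "lightweight"}),
    Laptop("Bravo 15", 1199, 4.6, {"dedicated gpu", "high refresh screen"}),
    Laptop("Charlie 14", 949, 4.4, {"long battery", "lightweight", "touchscreen"}),
]


def best_match(budget: int, wanted: set[str], catalogue: list[Laptop]) -> Laptop | None:
    """Return the in-budget laptop with the most wanted features.

    Ties are broken by higher rating, then by lower price.
    """
    affordable = [lap for lap in catalogue if lap.price <= budget]
    if not affordable:
        return None
    return max(
        affordable,
        key=lambda lap: (len(lap.features & wanted), lap.rating, -lap.price),
    )


pick = best_match(1000, {"long battery", "touchscreen"}, CATALOGUE)
if pick:
    print(f"{pick.name}: ${pick.price}, {pick.rating}/5")
```

In a real quiz site, the animated reveal and the result card would sit on top of a step like this; that front-end part isn’t shown here.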

4) Math Problems

For this round, I gave each AI three different math questions. I wanted to test their calculation skills, knowledge of constants, and algebra understanding.

Prompts used: A train travels at 60 km/h. How long will it take to cover 180 km? What is the value of π (pi) rounded to three decimal places?

You can see the results in the screenshots below

Results:

Screenshot of results of Math Problems


All four AIs got the answers right, but how they explained things varied a lot.

For the first two questions, every AI gave the correct answers.

ChatGPT showed the full working step by step.

Perplexity was clear and concise, showing formulas and giving “3 hours” and “π ≈ 3.142.”

Gemini also got it right and added some extra info about rounding pi, which wasn’t really needed.

DeepSeek said “3 hours” and “3.142,” with some extra words.
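For reference, here is the arithmetic behind the two correct answers as a short Python check: time equals distance divided by speed, and π rounded to three decimal places.

```python
import math

distance_km = 180
speed_kmh = 60

# time = distance / speed
hours = distance_km / speed_kmh
print(f"Travel time: {hours:g} hours")          # Travel time: 3 hours

# pi = 3.14159..., and the fourth decimal (5) rounds 3.141 up to 3.142
pi_3dp = round(math.pi, 3)
print(f"pi to three decimal places: {pi_3dp}")  # pi to three decimal places: 3.142
```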

For the domain question, DeepSeek was very detailed. It pointed out that x can’t be 2, showed interval notation, set notation, and explained it simply.

Perplexity gave the most complete answer: it included all the notations plus a short explanation of why the domain matters, touching on vertical asymptotes.

That made it really helpful for learning. ChatGPT was correct but confusing at times; it even went off on a tangent about a different version of the function, which might confuse students.

Gemini was clear and to the point, with interval notation and a simple explanation.

So, which AI did best?

They were all accurate. If you only want the right answer, any of these will do. In terms of clarity and efficiency, Perplexity and Gemini stood out. They explained things clearly without extra fluff.

DeepSeek gave more detail, which works well if you want to learn deeply but can feel wordy.

ChatGPT sometimes overcomplicates things, which might confuse beginners.

Perplexity’s extra note about vertical asymptotes was a nice bonus, helping you understand concepts better.

For everyday math homework or study, all these AIs will answer your questions. But if you want to learn fast and clearly, Perplexity and Gemini will help you most.

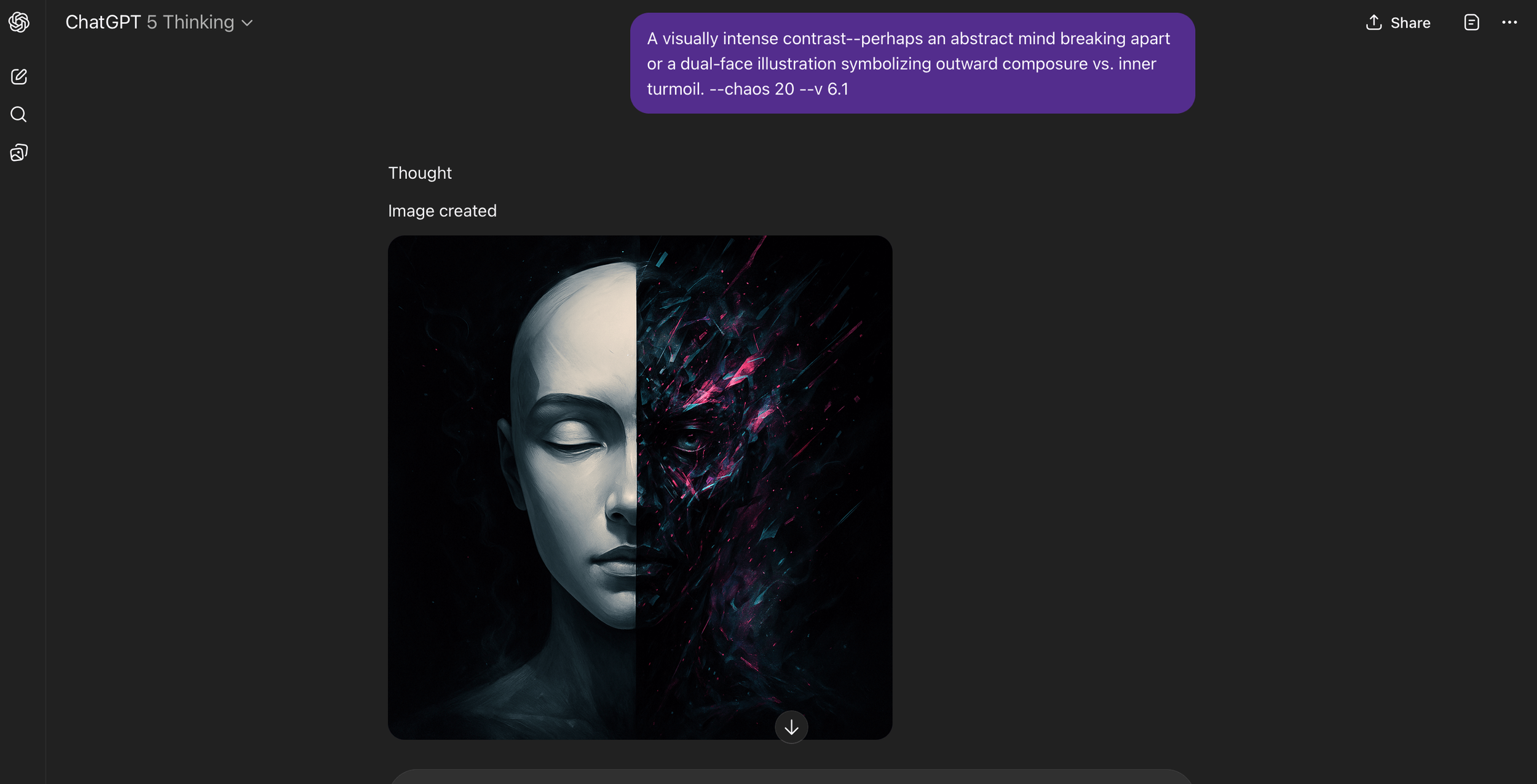

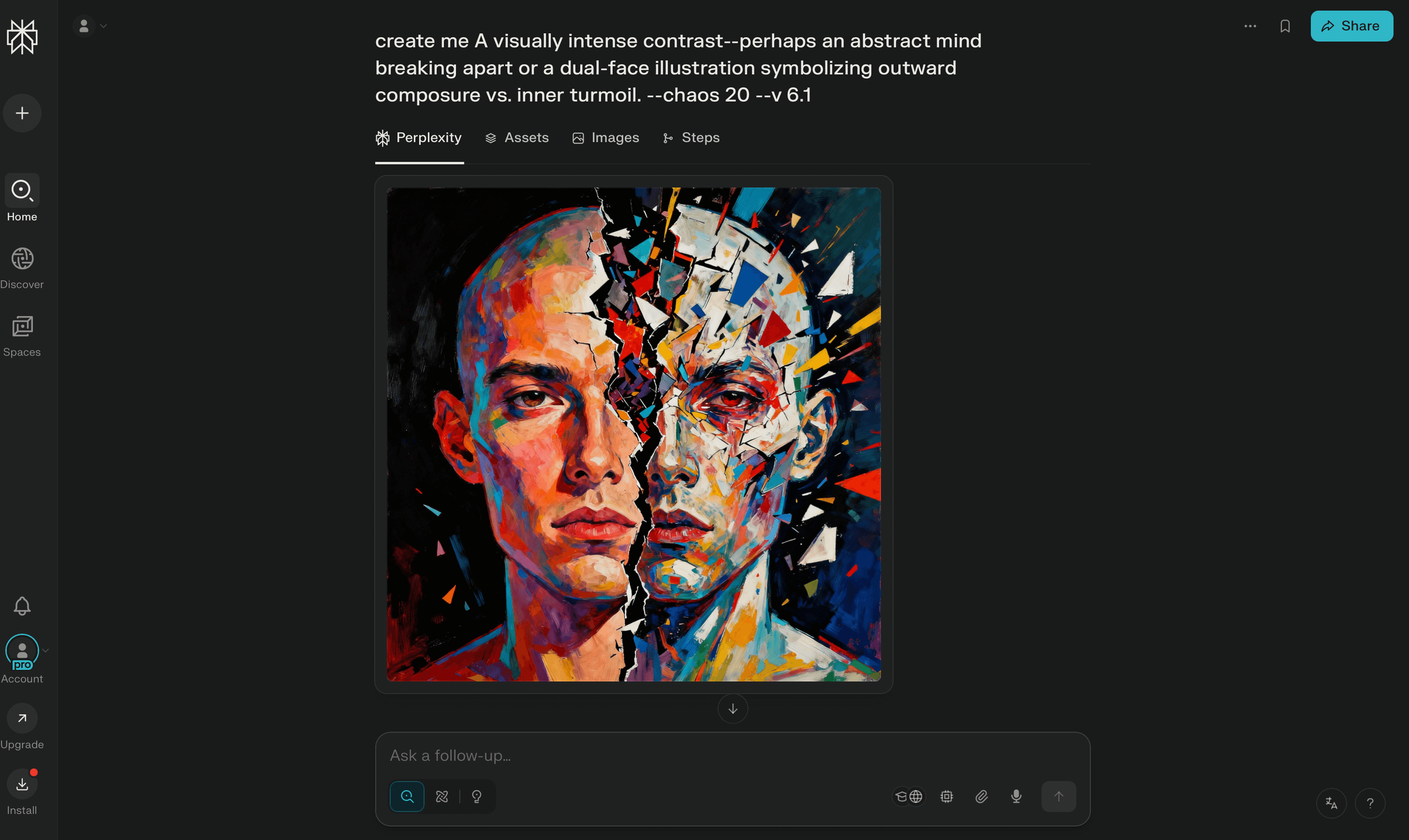

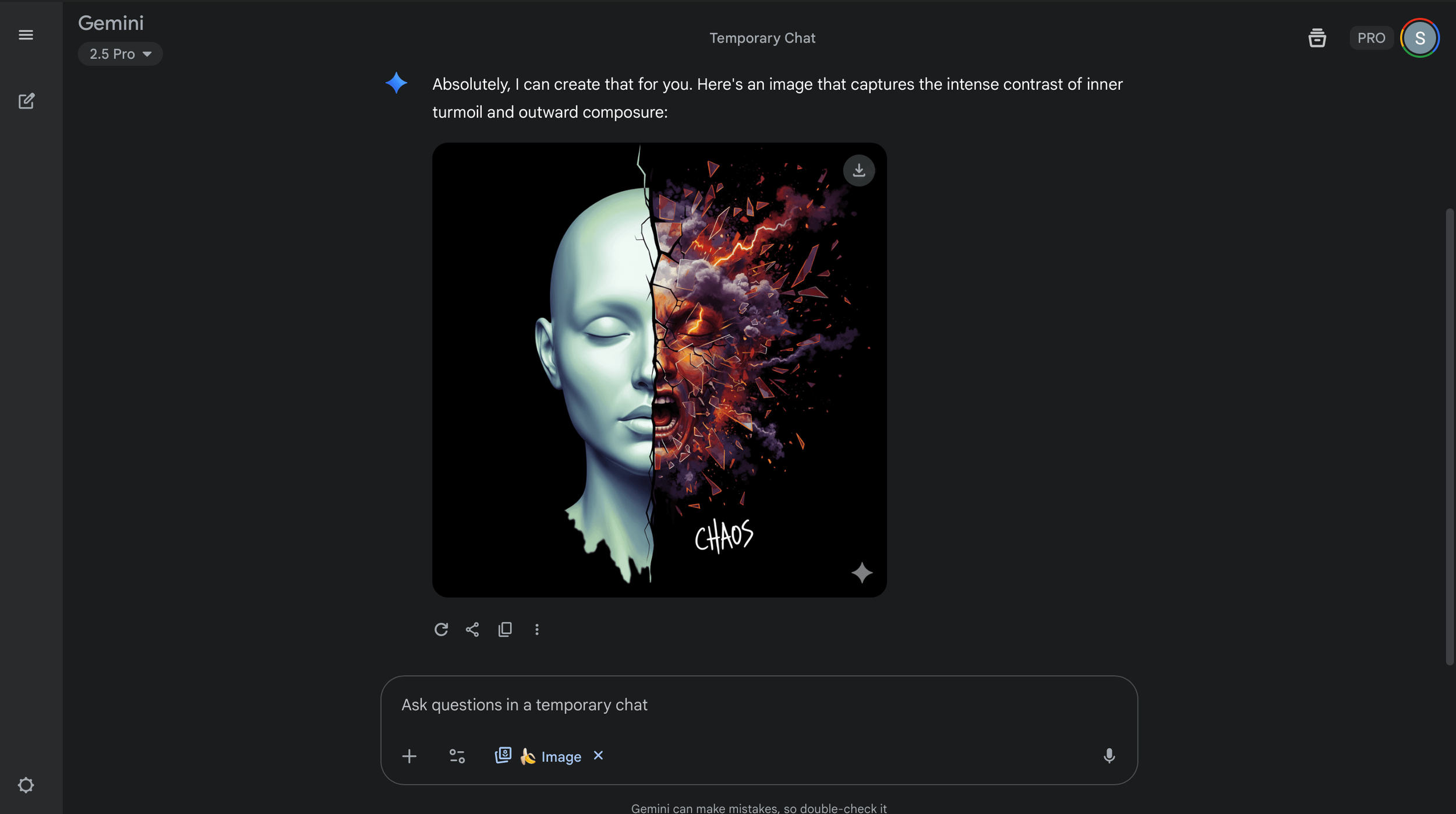

5) Image Generation

For this test, I asked each AI to create an intense illustration. The instructions were detailed and specific to test how well each AI understands creative direction.

Prompts used: A visually intense contrast--perhaps an abstract mind breaking apart or a dual-face illustration symbolizing outward composure vs. inner turmoil. --chaos 20 --v 6.1

You can see the results in the screenshots below

ChatGPT

Screenshot of results of image generation ChatGPT

Perplexity

Screenshot of results of image generation Perplexity

Gemini

Screenshot of results of image generation Gemini

DeepSeek

Screenshot of results of image generation DeepSeek

Results:

ChatGPT followed the prompt perfectly: one face, visually split. The left side was calm, and the right side swirled with chaos in a moody color palette. The execution was sharp and matched what I had in mind.

Perplexity made a colorful, bold portrait with two separate faces side by side. One had warm tones, the other had explosive geometric energy.

I was surprised by the quality, but Perplexity misread the prompt. Instead of one face split down the middle, it showed two different people. Beautiful art, wrong concept.

Gemini nailed the idea. It showed one face with a clean vertical split. One side was calm and pale, the other burst with red and orange chaos.

It even added the word "CHAOS" as a stylish touch. This was dramatic, professional, and exactly what I asked for.

DeepSeek admitted upfront it cannot generate images and suggested external art tools. Honest, but not helpful here.

When creative intent matters, prompt understanding is everything. Gemini was the winner, best at understanding and executing creative prompts with dramatic effect.

ChatGPT came in a close second with spot-on interpretation and professional results. Perplexity made stunning art but missed the core idea by showing two people instead of one split face. DeepSeek is text-only, so it was not an option.

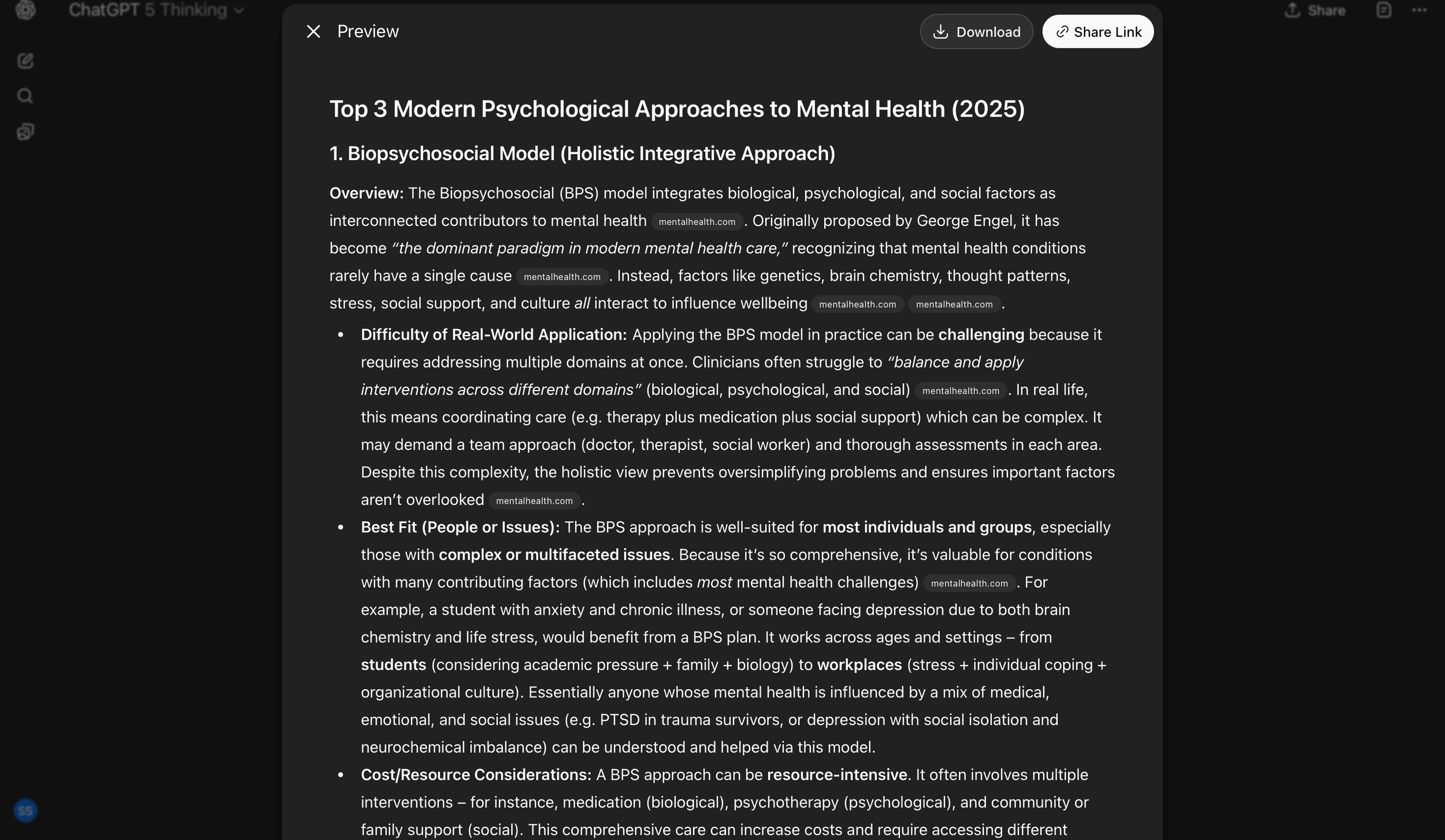

6) In-depth Research

For this advanced test, I asked each AI to research and compare the top 3 most influential modern approaches to mental health in 2025.

The challenge was finding up-to-date information and analyzing each theory's real-world difficulty, cost, best-fit populations, and offering actionable guidance for students or professionals picking an approach.

Prompts used: Research and compare the top 3 modern theories or approaches in psychology that are most influential for understanding mental health in 2025. For each theory or approach, provide: how difficult it is to apply in real life, what type of people or groups it works best for, any important cost or resource considerations, and real-world examples of success. At the end, synthesize this information into a clear recommendation framework for students or practitioners who are trying to choose the best approach for their needs.

You can see the results in the screenshots below

ChatGPT

Screenshot 1 of results of In-depth Research ChatGPT

Screenshot 2 of results of In-depth Research ChatGPT

Screenshot 3 of results of In-depth Research ChatGPT

Perplexity

Screenshot 1 of results of In-depth Research Perplexity

Screenshot 2 of results of In-depth Research Perplexity

Screenshot 3 of results of In-depth Research Perplexity

Gemini

Screenshot 1 of results of In-depth Research Gemini

Screenshot 2 of results of In-depth Research Gemini

DeepSeek

Screenshot of results of In-depth Research DeepSeek

Results:

ChatGPT started by asking clarifying questions about scope. Was I looking for therapeutic methods like CBT or broader models?

It then provided a detailed write-up using sources like mentalhealth.com and PubMed. The accuracy was high because it checked what I needed before researching. The downside was the extra back-and-forth added some delay.

Perplexity was the most impressive for pure research. You pick source types before searching: Web, Academic, Social, or Financial.

It pulled from 115 sources, far more than the others. The interface showed source cards and which journals were tapped.

It found the most current and wide-ranging data, making it reliable for academic or professional use. The output felt more like clear, organized notes than a polished report.

Gemini generated a beautifully formatted, ready-to-share report. It had a table of contents, an introduction on mental health trends, and a synthesis section.

It cited therapy blogs and mental health resource sites at the end for transparency. The output was readable and report-like, perfect for presentations or non-academics.

It relied more on web resources and blogs than deep, peer-reviewed journals.

DeepSeek could not do real-time research. It only has DeepThink mode, no web search. It created a professional-looking report but wrongly dated it in the future and listed theories out of sync with current timelines. The structure looked good, but the data was not up-to-date and sources were not visible or verifiable.

Perplexity is the clear winner for deep research. It finds more sources, accesses current and academic data, and gives you total transparency. If you need reliable, source-heavy insights for essays or professional work, pick this one.

Gemini is ideal for quick, well-formatted, shareable reports. Good for class presentations or summary guides.

ChatGPT offers solid, source-backed analysis. Great if you have specific angles or want extra accuracy, but expect a brief question and answer first.

DeepSeek is not suitable for real-time research. The data is outdated and unverifiable.

If you are a student, educator, or content creator needing up-to-date, deep comparative research on mental health or psychology, use Perplexity first for data collection and Gemini for report-ready formatting. ChatGPT works well for targeted, nuanced explorations with verifiable sources.

7) Paper Summarization

For this challenge, I used a technical neuroscience paper and asked Gemini, ChatGPT, DeepSeek, and Perplexity to break it down into plain English.

The goal was to make a complex research article accessible to students and regular readers by explaining the main question, methods, findings, real-world uses, limitations, and future research.

Prompts used: Read and analyze the attached research paper.

Summarize the key research question, methods, results, and main conclusion in easy English.

List the most important findings or insights.

Mention any strengths, weaknesses, or limitations in the study.

Highlight practical implications or advice based on the findings.

Include any questions for future research if stated.

Make the summary clear and useful for students and non-experts.

You can see the results in the screenshots below

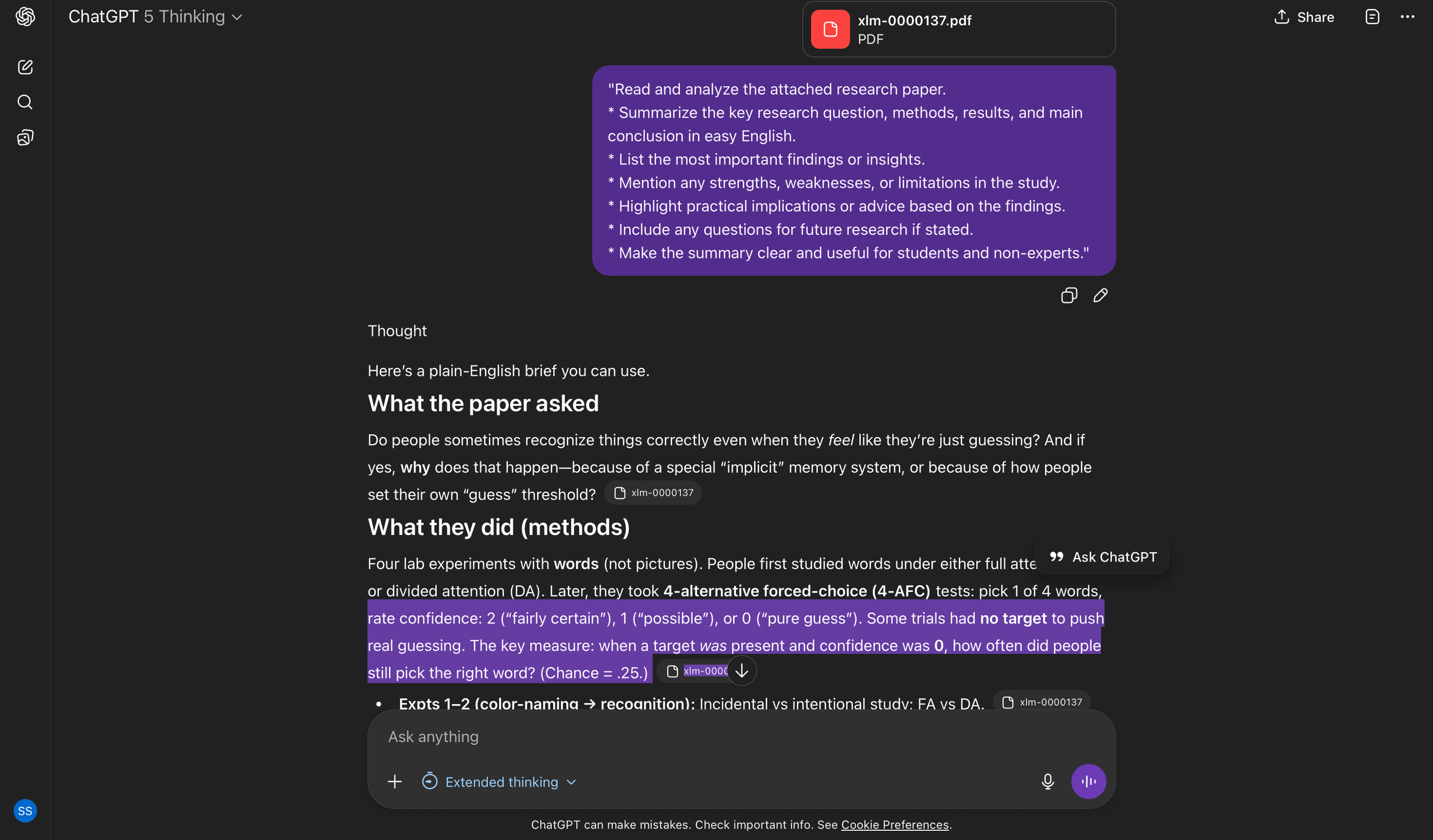

ChatGPT

Screenshot 1 of results of Paper Summarization ChatGPT

Screenshot 2 of results of Paper Summarization ChatGPT

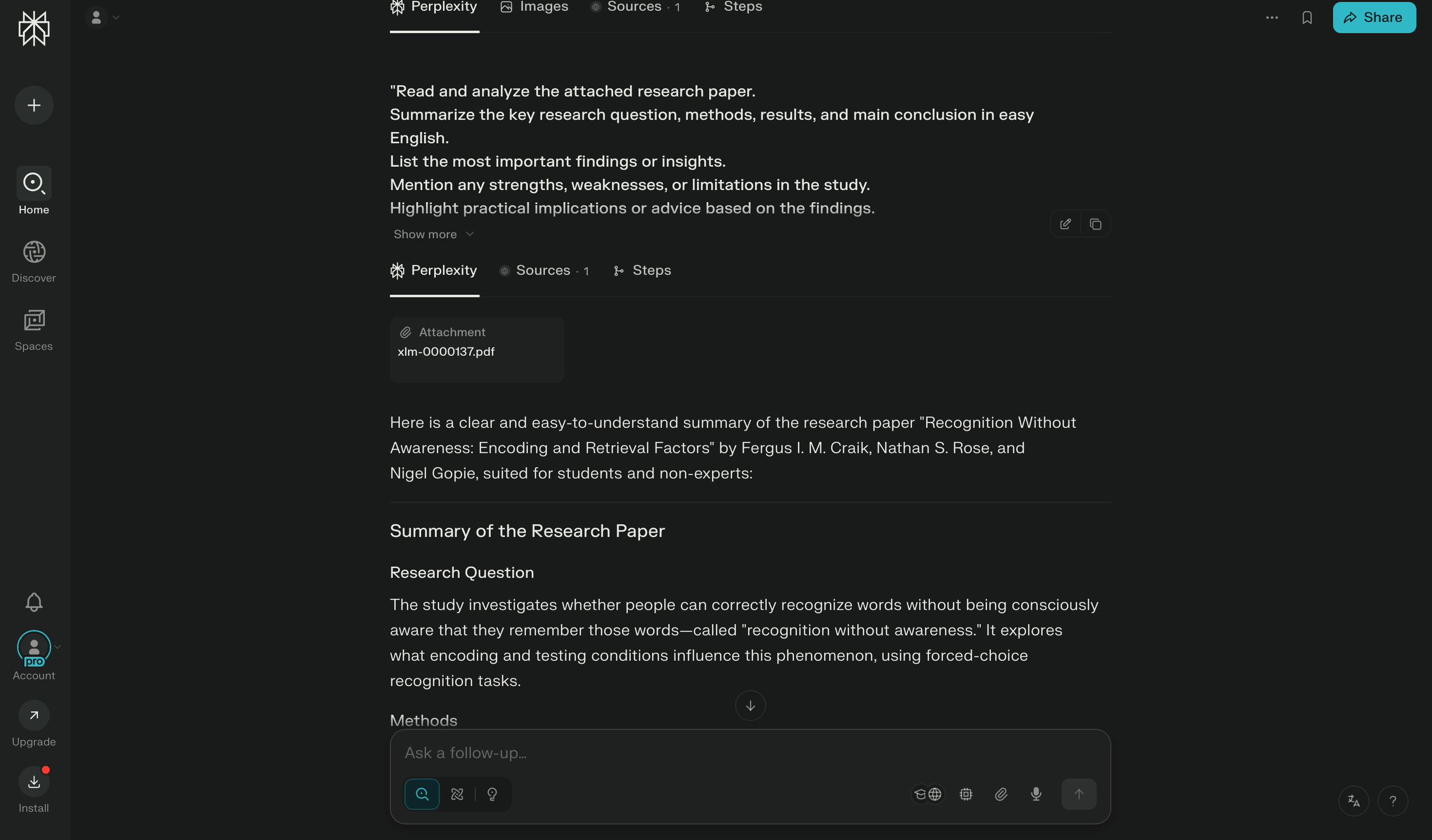

Perplexity

Screenshot 1 of results of Paper Summarization Perplexity

Screenshot 2 of results of Paper Summarization Perplexity

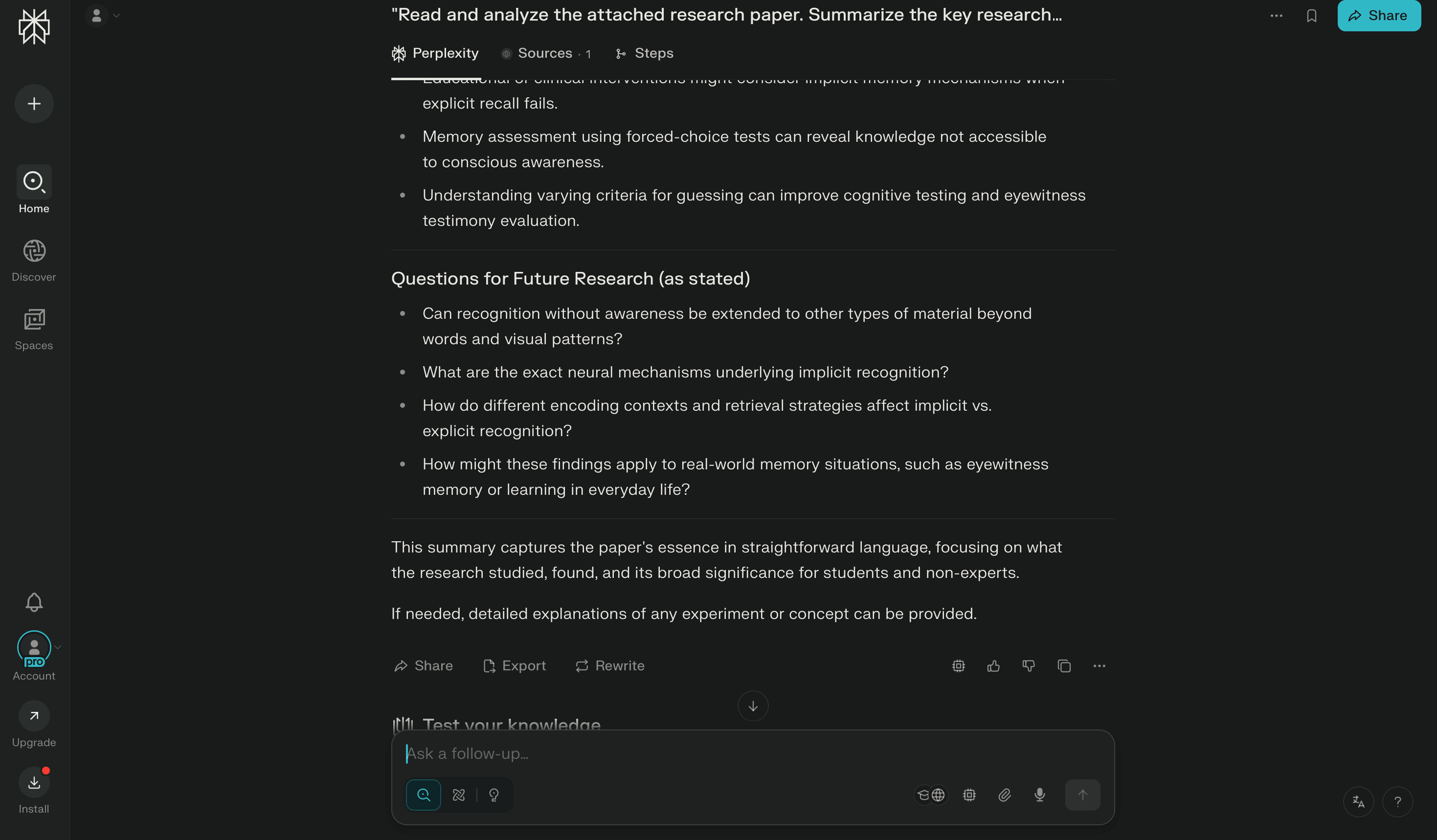

Gemini

Screenshot 1 of results of Paper Summarization Gemini

Screenshot 2 of results of Paper Summarization Gemini

DeepSeek

Screenshot 1 of results of Paper Summarization DeepSeek

Screenshot 2 of results of Paper Summarization DeepSeek

Results:

ChatGPT used formal headers and numbered sections, reading more academic. The tone was accessible but packed with details, offering deeper dives and specific experiment examples.

It covered everything thoroughly and offered to make a one-page student handout for extra help.

Perplexity gave short bullet points, straightforward and direct.

The tone was simple and clear. It covered all required parts but with less explanation. It briefly noted mindful guessing as a valid test strategy.

Gemini gave clear sections with short bullet points. The tone felt friendly and conversational, using everyday examples like "you don't always need to be sure to be right."

It covered all the parts I asked for and added a "Summary Tip" at the end with practical advice for students.

DeepSeek used clear sections with bold headers and opened with a "Big Question" framing. The tone felt conversational and easy to relate to right away.

It matched all the points I asked for and gave strong real-world advice, emphasizing examples like test-taking, decision-making, and understanding memory in daily life.

DeepSeek wins overall. It blends approachable explanation, practical relevance, and logical flow perfectly.

The "Big Question" framing makes research instantly relatable, and practical examples help students see how to use the findings in their own lives.

Gemini works best for student-friendly summaries. It uses a friendly tone and simple advice, great for younger students or anyone new to research.

ChatGPT works best for deep understanding. Its detailed analysis and option for a summary handout make it ideal for students or teachers needing more context.

Perplexity is fastest. It delivers quick bullet-point summaries, handy for referencing when you need the main points fast.

If you are a student or teacher breaking down research papers quickly and clearly, all four AIs work, but DeepSeek and Gemini make complex ideas accessible and actionable.

ChatGPT fits those who want academic-level detail. Use Perplexity when time is tight and you only want the essentials.

For student-friendly research digests and making technical papers approachable, combine DeepSeek or Gemini for clarity with ChatGPT for deep understanding.

This maximizes both learning and practical use in your own studies or resources.

Conclusion

In summary, the best AI tool depends on what you need.

Perplexity Pro is best for deep research and factual answers, Gemini Pro (Nano Banana) shines in creative and image tasks, and ChatGPT Plus (with DeepSeek as a backup) is your top choice for coding or building solutions.

Every platform brings its own strengths to the table—pick your tool based on the job, and you’ll get the best results every time.

Don't miss my guides on unlocking Perplexity Pro and Gemini Pro at zero cost for students. Check out my posts for step-by-step instructions and start saving today—your AI-powered workflow just got a whole lot smarter.

FAQs

Q.1 Can you trust AI answers?

AI answers depend on the data and sources they use.

Research shows that newer ChatGPT models make 45% fewer factual errors, but they still hallucinate about 4.8% of the time.

For important questions or research topics, always check the information yourself and look for cited sources. Treat AI answers as starting points, not final truth.

Q.2 Do AIs cite real academic research?

Some tools like Perplexity Pro and ChatGPT cite academic research, journal articles, and official sources. This works best if you use research mode or ask for citations directly.

Always review the links or studies provided. Studies show only 14% of AI-generated citations link to real, verifiable sources, so double-check them.

Q.3 Which are best for blog writing or freelance work?

ChatGPT Plus works well for drafting, editing, outlining, and generating ideas.

Gemini Pro produces friendly, creative content with strong formatting and visual support.

Perplexity Pro works best for quick fact-checking and pulling references.

Q.4 How can you improve AI answers for your workflow?

Give detailed prompts and clear context.

Tell the AI what format you want, like lists, tables, or summaries.

Ask for citations or references when you need them.

Refine answers by requesting edits or clarifications.

Q.5 Tips on prompt engineering for better results

Be specific about your needs. Say things like "Summarize in 100 words," "Include links," or "Make this student-friendly."

Break big tasks into small, step-by-step prompts. Add keywords or limits like "no technical jargon" or "for beginners."

Try different phrasings or follow-up questions if the first result does not work.
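To make these tips concrete, here is a small Python sketch that assembles a prompt from the pieces suggested above: the task, the audience, the output format, a length limit, extra constraints, and a request for citations. The function and parameter names are hypothetical; you would paste the resulting text into whichever tool you use.

```python
from __future__ import annotations


def build_prompt(
    task: str,
    audience: str | None = None,
    output_format: str | None = None,
    word_limit: int | None = None,
    constraints: list[str] | None = None,
    want_citations: bool = False,
) -> str:
    """Combine a task with explicit context and limits into one prompt string."""
    parts = [task.strip()]
    if audience:
        parts.append(f"Audience: {audience}.")
    if output_format:
        parts.append(f"Format the answer as {output_format}.")
    if word_limit:
        parts.append(f"Keep it under {word_limit} words.")
    for rule in constraints or []:
        parts.append(f"Constraint: {rule}.")
    if want_citations:
        parts.append("Cite your sources with links.")
    return "\n".join(parts)


prompt = build_prompt(
    "Summarize the attached research paper.",
    audience="beginners",
    output_format="a bulleted list",
    word_limit=100,
    constraints=["no technical jargon"],
    want_citations=True,
)
print(prompt)
```

For bigger jobs, you can call it once per step (outline, then draft, then edit) instead of packing everything into a single prompt.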